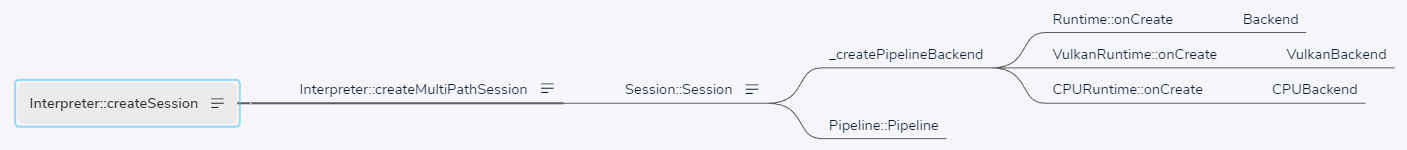

MNN createSession: Creating the Pipeline Backend (Part 4)

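Series Contents

MNN createFromBuffer (Part 1)

MNN createRuntime (Part 2)

MNN createSession: Schedule (Part 3)

MNN createSession: Creating the Pipeline Backend (Part 4)

MNN Session: Shape Computation (Part 5)

MNN Session: Geometry Computation (Part 6)

MNN Session: CPU Operators (Part 7)

MNN Session: Vulkan Operators (Part 8)

MNN: Running Inference (Part 9)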

Contents

- Series Contents

- 1. createSession

- 1.1 createMultiPathSession

- 1.1.1 Session class: ModeGroup

- 1.1.2 Session::Session

- 1.1.2.1 _createPipelineBackend

- 1.1.2.1.1 VulkanRuntime::onCreate

- 1.1.2.1.1.1 VulkanBackend::VulkanBackend

- 1.1.2.1.2 CPURuntime::onCreate

- 1.1.2.1.2.1 CPUBackend::CPUBackend

- 1.1.2.2 Pipeline class: TuningAttr, UnitInfo

- 1.1.2.3 Pipeline::Pipeline

- 1.1.2.3.1 GeometryComputer::Context

1. createSession

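Creates a session from a ScheduleConfig and a RuntimeInfo. It simply wraps the single config in a one-element list and forwards it to createMultiPathSession.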

```cpp
// source/core/Interpreter.cpp
Session* Interpreter::createSession(const ScheduleConfig& config, const RuntimeInfo& runtime) {
    return createMultiPathSession({config}, runtime);
}
```

1.1 createMultiPathSession
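Besides constructing the Session, the function below makes two follow-up decisions: whether to resize the session immediately, and whether to try writing the backend cache to disk. The sketch isolates those two conditions as a pure function. The enums and struct fields are hypothetical stand-ins for Interpreter::SessionMode values and for variables defined in the elided part of the function; in particular, treating valid as "an existing cache was loaded successfully" is an assumption. This is not MNN code.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins for the Interpreter::SessionMode values checked here.
enum class InputMode { Inside, User };
enum class ResizeMode { Direct, Defer };
enum class BackendMode { Fix, Auto };

// Hypothetical bundle of the state the two decisions depend on.
struct PostCreateState {
    bool validForResize;      // info.validForResize
    InputMode inputMode;      // mNet->modes.inputMode
    ResizeMode resizeMode;    // mNet->modes.resizeMode
    BackendMode backendMode;  // mNet->modes.backendMode
    std::string cacheFile;    // mNet->cacheFile
    bool cacheValid;          // `valid` from the elided part (assumed: cache loaded OK)
};

struct PostCreateActions {
    bool resizeNow = false;      // call result->resize() right away
    bool tryWriteCache = false;  // call getCache() and write the cache file if non-empty
};

PostCreateActions decidePostCreate(const PostCreateState& s) {
    PostCreateActions a;
    a.resizeNow = s.validForResize && s.inputMode == InputMode::Inside &&
                  s.resizeMode == ResizeMode::Direct;
    a.tryWriteCache = !s.cacheFile.empty() && !s.cacheValid &&
                      s.backendMode == BackendMode::Fix;
    return a;
}

// Mirrors `cacheMode = cacheMode | 2;`: set the "cache written" bit.
int markCacheWritten(int cacheMode) { return cacheMode | 2; }
```

In the code shown, cacheMode is only consumed by the MNN_INTERNAL_ENABLED metrics, so the bit is informational rather than control flow.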

```cpp
// source/core/Interpreter.cpp
Session* Interpreter::createMultiPathSession(const std::vector<ScheduleConfig>& configs, const RuntimeInfo& runtime) {
    // ...
    auto newSession = std::unique_ptr<Session>(new Session(std::move(info), mNet->modes, std::move(rt)));
    if (!newSession->valid()) {
        MNN_PRINT("Invalide Session!!\n");
        return nullptr;
    }
    auto result = newSession.get();
    auto validForResize = info.validForResize;
    if (validForResize && mNet->modes.inputMode == Session_Input_Inside && mNet->modes.resizeMode == Session_Resize_Direct) {
        result->resize();
    }
    if ((!mNet->cacheFile.empty()) && (!valid) && mNet->modes.backendMode == Session_Backend_Fix) {
        // Try to save extra cache
        auto buffer = result->getCache();
        if (buffer.first != nullptr && buffer.second > 0) {
            MNN_PRINT("Write cache to %s, size = %zu\n", mNet->cacheFile.c_str(), buffer.second);
            writeCacheFile(mNet, buffer);
            mNet->lastCacheSize = buffer.second;
            // Write Cache
            cacheMode = cacheMode | 2;
        }
    }
    // Reset cache
    result->loadCache(nullptr, 0);
    mNet->sessions.emplace_back(std::move(newSession));
#ifdef MNN_INTERNAL_ENABLED
    int precision = BackendConfig::Precision_Normal;
    if (nullptr != configs[0].backendConfig) {
        precision = configs[0].backendConfig->precision;
    }
    int mode = configs[0].mode;
    mNet->sessionInfo.insert(std::make_pair(result, std::make_tuple(precision, mode)));
    if (shouldLog(FREQ_HIGH)) {
        std::map<std::string, std::string> metrics = mNet->basicLogginData;
        metrics.emplace("UUID", mNet->uuid);
        metrics.emplace("Time", std::to_string((float)_timer.durationInUs() / 1024.0f));
        metrics.emplace("Backend", std::to_string(configs[0].type));
        metrics.emplace("Precision", std::to_string(precision));
        metrics.emplace("Mode", std::to_string(mode));
        metrics.emplace("Cache", std::to_string(cacheMode));
        metrics.emplace("CacheSize", std::to_string((float)(mNet->lastCacheSize / 1024.0f)));
        metrics.emplace("ModelSize", std::to_string((float)mNet->buffer.size() / 1024.0f / 1024.0f));
        metrics.emplace("Usage", std::to_string((int)mNet->net->usage()));
        metrics.emplace("API", "Interpreter::createMultiPathSession");
        logAsync(metrics);
    }
#endif // MNN_INTERNAL_ENABLED
    return result;
}
```

1.1.1 Session class: ModeGroup
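Each ModeGroup field holds one value out of a small family of Interpreter::SessionMode values, with the defaults listed in the struct below. A minimal sketch of how a single mode value could be routed to the field it belongs to, in the spirit of Interpreter::setSessionMode. The enum is a hypothetical subset and the routing is an assumption, not MNN's implementation.

```cpp
#include <cassert>

// Hypothetical subset of session modes; each pair controls one ModeGroup field.
enum class SessionMode {
    Debug, Release,             // callBackMode
    InputInside, InputUser,     // inputMode
    OutputInside, OutputUser,   // outputMode
    BackendFix, BackendAuto,    // backendMode
    ResizeDirect, ResizeDefer,  // resizeMode
};

// Same defaults as Session::ModeGroup for the fields modelled here.
struct ModeGroup {
    SessionMode callBackMode = SessionMode::Debug;
    SessionMode inputMode = SessionMode::InputInside;
    SessionMode outputMode = SessionMode::OutputInside;
    SessionMode backendMode = SessionMode::BackendFix;
    SessionMode resizeMode = SessionMode::ResizeDirect;
};

// Route one mode value to the field it belongs to, leaving the others untouched.
void setMode(ModeGroup& g, SessionMode m) {
    switch (m) {
        case SessionMode::Debug:
        case SessionMode::Release:
            g.callBackMode = m;
            break;
        case SessionMode::InputInside:
        case SessionMode::InputUser:
            g.inputMode = m;
            break;
        case SessionMode::OutputInside:
        case SessionMode::OutputUser:
            g.outputMode = m;
            break;
        case SessionMode::BackendFix:
        case SessionMode::BackendAuto:
            g.backendMode = m;
            break;
        case SessionMode::ResizeDirect:
        case SessionMode::ResizeDefer:
            g.resizeMode = m;
            break;
    }
}
```

Grouping the modes this way lets a caller change one behavior at a time while every other field keeps its default.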

```cpp
// source/core/Session.hpp
class MNN_PUBLIC Session {
public:
    struct ModeGroup {
        Interpreter::SessionMode callBackMode = Interpreter::Session_Debug;
        Interpreter::SessionMode inputMode = Interpreter::Session_Input_Inside;
        Interpreter::SessionMode outputMode = Interpreter::Session_Output_Inside;
        Interpreter::SessionMode backendMode = Interpreter::Session_Backend_Fix;
        Interpreter::SessionMode resizeMode = Interpreter::Session_Resize_Direct;
        Interpreter::SessionMode memoryUsageMode = Interpreter::Session_Memory_Collect;
        Interpreter::SessionMode codegenMode = Interpreter::Session_Codegen_Disable;
        int memoryAllocatorType = 0;
        int maxTuningNumber = MNN_DEFAULT_TUNING_NUMBER;
    };
    Session(Schedule::ScheduleInfo&& info, const ModeGroup& mode, RuntimeInfo&& runtime);
    ~Session();

    Session* clone(RuntimeInfo&& runtime, std::shared_ptr sharedConst);

public:
    /**
     * @brief infer.
     * @return result code.
     */
    ErrorCode run() const;
    /**
     * @brief infer with callbacks and sync option.
     * @param enterCallback callback before each op.
     * @param exitCallback callback after each op.
     * @param sync wait until all ops done before return or not.
     * @return result code.
     */
    ErrorCode runWithCallBack(const TensorCallBackWithInfo& enterCallback, const TensorCallBackWithInfo& exitCallback,
                              bool sync = false) const;

    bool getInfo(Interpreter::SessionInfoCode code, void* ptr) const;

public:
    /**
     * @brief resize tensors and buffers responding to input changes.
     * @return result code.
     */
    ErrorCode resize();

    /**
     * @brief set if needs resize.
     * @param flag needs resize or not.
     */
    void setNeedResize(bool flag = true) {
        mNeedResize = flag;
    }

    void setNeedMalloc(bool flag = true) {
        mNeedMalloc = flag;
    }

    Runtime* getCPURuntime() {
        return mRuntime.second.get();
    }

public:
    /**
     * @brief get backend that create the tensor.
     * @param tensor given tensor.
     * @return backend that create the tensor, NULL if the tensor is created by default backend (CPU backend).
     */
    const Backend* getBackEnd(const Tensor* tensor) const;

    /**
     * @brief get input tensor for given op name.
     * @param name given op name. if NULL, return first input tensor.
     * @return input tensor if found, NULL otherwise.
     */
    Tensor* getInput(const char* name) const;

    /**
     * @brief get output tensor for given op name.
     * @param name given op name. if NULL, return first output tensor.
     * @return output tensor if found, NULL otherwise.
     */
    Tensor* getOutput(const char* name) const;

    /**
     * @brief get output tensors map.
     * @return get output tensors map.
     */
    const std::map<std::string, Tensor*>& getOutputAll() const;
    const std::map<std::string, Tensor*>& getInputAll() const;

    /**
     * @brief check session is valid or not.
     * @return session is valid or not.
     */
    inline bool valid() const {
        return mValid;
    }

    /**
     * @brief update the session's const value to origin model's const blob.
     * @return errorcode
     */
    ErrorCode updateToModel(Net* net) const;

    void waitAsyncResize();
    bool hasAsyncWork();
    bool loadCache(const void* buffer, size_t size);
    std::pair<const void*, size_t> getCache();

    Tensor* getTensor(int index) const;
    Schedule::PipelineInfo& getPipelineInfo(int index) const;

protected:
    const std::vector<std::shared_ptr<Pipeline>>& getPipelines() const {
        return this->mPipelines;
    }

private:
    void _clearCache();
    void _setUpTensorInfo(const Schedule::ScheduleInfo& info);

private:
    RuntimeInfo mRuntime;
    std::vector<std::shared_ptr<Pipeline>> mPipelines;
    bool mNeedResize = true;
    bool mValid = true;
    bool mNeedMalloc = true;
    Interpreter::SessionMode mCallBackMode;
    Interpreter::SessionMode mMemoryUsageMode;
    Interpreter::SessionMode mCodegenMode;
    Schedule::ScheduleInfo mInfo;
    ModeGroup mMode;
};
```

1.1.2 Session::Session
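The constructor below turns the mode group into per-pipeline arguments: allocInput is true for Session_Input_Inside, outputStatic is true for Session_Output_User, and TuningAttr::autoSetOpType is true for Session_Backend_Auto. A sketch of that mapping, using hypothetical enums and a trimmed, hypothetical ModeGroup:

```cpp
#include <cassert>

// Hypothetical subset of Interpreter::SessionMode, one enum per ModeGroup field.
enum class InputMode { Inside, User };
enum class OutputMode { Inside, User };
enum class BackendMode { Fix, Auto };

// Trimmed, hypothetical version of Session::ModeGroup.
struct ModeGroup {
    InputMode inputMode = InputMode::Inside;
    OutputMode outputMode = OutputMode::Inside;
    BackendMode backendMode = BackendMode::Fix;
    int maxTuningNumber = 0;  // placeholder default, not MNN_DEFAULT_TUNING_NUMBER
};

// Same fields as Pipeline::TuningAttr.
struct TuningAttr {
    bool autoSetOpType;
    int maxTuningNumber;
};

// The values Session::Session passes to each Pipeline constructor.
struct PipelineArgs {
    bool allocInput;
    bool outputStatic;
    TuningAttr tune;
};

PipelineArgs derivePipelineArgs(const ModeGroup& mode) {
    PipelineArgs args{};
    args.allocInput = mode.inputMode == InputMode::Inside;
    args.outputStatic = mode.outputMode == OutputMode::User;
    args.tune.autoSetOpType = mode.backendMode == BackendMode::Auto;
    args.tune.maxTuningNumber = mode.maxTuningNumber;
    return args;
}
```

Because the mapping depends only on the ModeGroup, every pipeline in one session receives the same three values; only the backend and runtime differ per pipeline.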

```cpp
// source/core/Session.cpp
Session::Session(Schedule::ScheduleInfo&& info, const ModeGroup& mode, RuntimeInfo&& runtime) {
    mMode = mode;
    mRuntime = std::move(runtime);
    if (info.pipelineInfo.empty()) {
        mValid = false;
        return;
    }
    mInfo = std::move(info);
    for (auto& iter : mInfo.pipelineInfo) {
        _createPipelineBackend(iter, mRuntime);
        Pipeline::TuningAttr attr;
        attr.maxTuningNumber = mode.maxTuningNumber;
        attr.autoSetOpType = mode.backendMode == Interpreter::Session_Backend_Auto;
        auto rt = mRuntime.first.find(iter.first.info.type)->second.get();
        auto cpuRuntime = mRuntime.second;
        std::shared_ptr<Pipeline> newPipeline(new Pipeline(std::move(iter),
                                                           mode.inputMode == Interpreter::Session_Input_Inside,
                                                           mode.outputMode == Interpreter::Session_Output_User,
                                                           attr, rt, cpuRuntime.get()));
        mPipelines.emplace_back(std::move(newPipeline));
    }
    mCallBackMode = mode.callBackMode;
    mMemoryUsageMode = mode.memoryUsageMode;
    mCodegenMode = mode.codegenMode;
}
```

1.1.2.1 _createPipelineBackend
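Every pipeline ends up with a pair of backends: a main backend from the runtime that matches its forward type, and a default CPU backend. The main backend doubles as the default only when it is itself a CPU backend and the user config carries no special flags. A simplified, self-contained model of that logic; Backend, Runtime, ForwardType, and the specialUsage parameter are hypothetical stand-ins, not MNN's classes.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <utility>

enum class ForwardType { CPU, Vulkan };  // hypothetical subset of MNNForwardType

struct Backend {
    ForwardType type;
    explicit Backend(ForwardType t) : type(t) {}
};

struct Runtime {
    ForwardType type;
    explicit Runtime(ForwardType t) : type(t) {}
    // Stand-in for Runtime::onCreate: a new backend of this runtime's type.
    Backend* onCreate() const { return new Backend(type); }
};

struct BackendCache {
    ForwardType type;
    // first: main backend; second: default CPU backend
    std::pair<std::shared_ptr<Backend>, std::shared_ptr<Backend>> cache;
};

using RuntimeMap = std::map<ForwardType, std::shared_ptr<Runtime>>;

void createPipelineBackend(BackendCache& bc, const RuntimeMap& runtimes,
                           const Runtime& cpuRuntime, bool specialUsage) {
    if (bc.cache.first != nullptr) {
        return;  // backends already created for this pipeline
    }
    const Runtime* rt = runtimes.at(bc.type).get();  // throws if no runtime is registered
    bc.cache.first.reset(rt->onCreate());
    if (bc.cache.first->type == ForwardType::CPU && !specialUsage) {
        bc.cache.second = bc.cache.first;  // share the CPU backend
    } else {
        bc.cache.second.reset(cpuRuntime.onCreate());  // separate default CPU backend
    }
}
```

The sketch uses std::map::at, so a missing runtime throws std::out_of_range, whereas the original find(...)->second assumes the lookup always succeeds.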

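Creates the backends for a pipeline: a main backend for the pipeline's forward type and a default CPU backend, both stored in BackendCache: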

```cpp
// source/core/Session.cpp
// typedef std::pair<BackendCache, std::vector<OpCacheInfo>> PipelineInfo;
//
// struct BackendCache {
//     Backend::Info info;
//     BackendConfig config;
//     std::pair<std::shared_ptr<Backend>, std::shared_ptr<Backend>> cache;
//     bool needComputeShape = true;
//     bool needComputeGeometry = true;
//     bool reportError = true;
//     std::map inputTensorCopyCache;
// };
//
// typedef std::pair<std::map<MNNForwardType, std::shared_ptr<Runtime>>, std::shared_ptr<Runtime>> RuntimeInfo;
//
static void _createPipelineBackend(Schedule::PipelineInfo& iter, RuntimeInfo& runtime) {
    // iter.first is a struct BackendCache
    if (iter.first.cache.first != nullptr) {
        return;
    }
    // runtime.first is a std::map<MNNForwardType, std::shared_ptr<Runtime>>;
    // look up the Runtime for this MNNForwardType (here MNN_FORWARD_VULKAN -> VulkanRuntime)
    auto rt = runtime.first.find(iter.first.info.type)->second.get();
    // runtime.second is the default Runtime (CPURuntime)
    auto cpuRuntime = runtime.second;
    bool specialUsage = false;
    if (iter.first.info.user != nullptr) {
        specialUsage = iter.first.info.user->flags > 0;
    }
    // Calls the main runtime's onCreate (VulkanRuntime::onCreate in this walkthrough),
    // which creates the matching Backend (VulkanBackend).
    // iter.first.cache is a std::pair<std::shared_ptr<Backend>, std::shared_ptr<Backend>>
    iter.first.cache.first.reset(rt->onCreate(iter.first.info.user));
    std::shared_ptr<Backend> second;
    if (iter.first.cache.first->type() == MNN_FORWARD_CPU && (!specialUsage)) {
        iter.first.cache.second = iter.first.cache.first;
    } else {
        // Const Backend shouldn't be used as default backend
        // The session may be schedule multi-thread but const backend is the same
        // We need create a new backend to do size compute / not support op compute
        // Create the default Backend (CPUBackend)
        BackendConfig defaultConfig;
        defaultConfig.flags = 4;
        iter.first.cache.second.reset(cpuRuntime->onCreate(&defaultConfig));
    }
}
```

1.1.2.1.1 VulkanRuntime::onCreate

```cpp
// source/backend/vulkan/runtime/VulkanRuntime.cpp
Backend* VulkanRuntime::onCreate(const BackendConfig* config) const {
    // FIXME: Use config
    return new VulkanBackend(this, mInfo);
}
```

1.1.2.1.1.1 VulkanBackend::VulkanBackend
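The constructor below allocates an extra command buffer (mCmdBuffer) only when the backend is not in direct mode, and mDirect is false only for Backend::Info::INDIRECT; the init buffer is allocated in every case. A minimal model of that rule. CommandPool, CommandBuffer, Mode, and VulkanBackendModel are hypothetical placeholders, and what the two modes mean for command submission is not modelled.

```cpp
#include <cassert>
#include <memory>

enum class Mode { Direct, Indirect };  // hypothetical mirror of Backend::Info::mode

struct CommandBuffer {};  // placeholder for a Vulkan command buffer wrapper

struct CommandPool {
    int allocated = 0;  // how many buffers this pool has handed out
    CommandBuffer* allocBuffer() {
        ++allocated;
        return new CommandBuffer();
    }
};

struct VulkanBackendModel {
    bool direct;
    std::unique_ptr<CommandBuffer> cmdBuffer;   // only allocated in indirect mode
    std::unique_ptr<CommandBuffer> initBuffer;  // always allocated

    VulkanBackendModel(CommandPool& pool, Mode mode) : direct(mode != Mode::Indirect) {
        if (!direct) {
            cmdBuffer.reset(pool.allocBuffer());
        }
        initBuffer.reset(pool.allocBuffer());
    }
};
```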

```cpp
// source/backend/vulkan/image/backend/VulkanBackend.cpp
VulkanBackend::VulkanBackend(const VulkanRuntime* runtime, const Backend::Info& info) : Backend(MNN_FORWARD_VULKAN) {
    mRuntime = runtime;
    mDirect = Backend::Info::INDIRECT != info.mode;
    mDynamicMemoryPool.reset(new VulkanMemoryPool(runtime->mMemoryPool.get()));

    auto& dev = device();
    mFence = std::make_shared<VulkanFence>(dev);
    if (!mDirect) {
        mCmdBuffer.reset(runtime->mCmdPool->allocBuffer());
    }
    mInitBuffer.reset(runtime->mCmdPool->allocBuffer());
}
```

1.1.2.1.2 CPURuntime::onCreate
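The function below picks a concrete CPU backend in a fixed order: a non-null config first overrides the runtime's precision, flags, and memory mode; then the ARMv8.2 FP16 backend, the BF16 backend, the plain default backend, and the AVX2 backend are tried in turn. The sketch replaces the MNN_USE_ARMV82, MNN_SUPPORT_BF16, and MNN_USE_SSE build switches with runtime booleans. It assumes, as defaultConfig.flags = 4 in _createPipelineBackend suggests, that MNN_CPU_USE_DEFAULT_BACKEND equals 4. All names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

enum class Precision { Normal, High, Low, LowBF16 };

// Hypothetical switches standing in for build flags plus the matching runtime checks.
struct CpuFeatures {
    bool armv82Build = false, fp16Arith = false;
    bool bf16Build = false, bf16Functions = false;
    bool sseBuild = false, avx2Valid = false;
};

// Assumption: equals MNN_CPU_USE_DEFAULT_BACKEND.
constexpr std::size_t kUseDefaultBackend = 4;

std::string pickCpuBackend(Precision p, std::size_t flags, const CpuFeatures& f) {
    if (f.armv82Build && f.fp16Arith && p == Precision::Low) return "Arm82Backend";
    if (f.bf16Build && p == Precision::LowBF16 && f.bf16Functions) return "BF16Backend";
    if (flags == kUseDefaultBackend) return "CPUBackend(flags=0)";
    if (f.sseBuild && f.avx2Valid) return "AVX2Backend";
    return "CPUBackend";
}

struct Config {  // hypothetical subset of BackendConfig
    Precision precision;
    std::size_t flags;
};

// A non-null config overrides the runtime defaults, as in CPURuntime::onCreate.
std::string onCreateModel(Precision rtPrecision, std::size_t rtFlags,
                          const Config* config, const CpuFeatures& f) {
    Precision p = rtPrecision;
    std::size_t flags = rtFlags;
    if (config != nullptr) {
        p = config->precision;
        flags = config->flags;
    }
    return pickCpuBackend(p, flags, f);
}
```

Under that assumption, the default backend created by _createPipelineBackend (normal precision, flags = 4) falls through to a plain CPUBackend with flags 0 rather than an AVX2 or FP16 backend.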

```cpp
// source/backend/cpu/CPUBackend.cpp
Backend* CPURuntime::onCreate(const BackendConfig* config) const {
    auto precision = mPrecision;
    auto memory = mMemory;
    size_t flags = mFlags;
    if (nullptr != config) {
        precision = config->precision;
        flags = config->flags;
        memory = config->memory;
    }
#ifdef LOG_VERBOSE
    MNN_PRINT("cpu backend was created by runtime:%p\n", this);
#endif
#ifdef MNN_USE_ARMV82
    auto core = MNNGetCoreFunctions();
    if (core->supportFp16arith && precision == BackendConfig::Precision_Low) {
        return new Arm82Backend(this, memory);
    }
#endif
#ifdef MNN_SUPPORT_BF16
    if (precision == BackendConfig::Precision_Low_BF16 && BF16Functions::get()) {
        return new BF16Backend(this);
    }
#endif
    if (flags == MNN_CPU_USE_DEFAULT_BACKEND) {
        return new CPUBackend(this, precision, memory, MNN_FORWARD_CPU, 0);
    }
#ifdef MNN_USE_SSE
    if (AVX2Backend::isValid()) {
        return new AVX2Backend(this, memory, flags);
    }
#endif
    return new CPUBackend(this, precision, memory, MNN_FORWARD_CPU, flags);
}
```

1.1.2.1.2.1 CPUBackend::CPUBackend
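The constructor below chooses the dynamic allocator from the runtime's allocator type: DeferBufferAllocator for Runtime::Allocator_Defer, EagerBufferAllocator otherwise, both layered on a recursive allocator over the runtime's static allocator. A toy model of the timing difference, under the assumption that an eager allocator reserves memory on each request while a deferred one collects requests and reserves them in a later compute step. The real allocators also plan offsets and reuse memory, which this sketch does not show; every name in it is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct DynamicAllocator {
    virtual ~DynamicAllocator() = default;
    virtual void alloc(std::size_t bytes) = 0;
    virtual void compute() {}  // no-op for eager allocation
    std::size_t reserved = 0;  // bytes actually reserved so far
};

// Reserves memory immediately on every request.
struct EagerAllocator : DynamicAllocator {
    void alloc(std::size_t bytes) override { reserved += bytes; }
};

// Records requests and reserves them all when compute() is called.
struct DeferAllocator : DynamicAllocator {
    std::vector<std::size_t> pending;
    void alloc(std::size_t bytes) override { pending.push_back(bytes); }
    void compute() override {
        for (std::size_t b : pending) reserved += b;
        pending.clear();
    }
};

enum class AllocatorType { Eager, Defer };  // hypothetical mirror of the runtime setting

std::unique_ptr<DynamicAllocator> makeDynamicAllocator(AllocatorType t) {
    if (t == AllocatorType::Defer) {
        return std::make_unique<DeferAllocator>();
    }
    return std::make_unique<EagerAllocator>();
}
```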

```cpp
// source/backend/cpu/CPUBackend.cpp
CPUBackend::CPUBackend(const CPURuntime* runtime, BackendConfig::PrecisionMode precision,
                       BackendConfig::MemoryMode memory, MNNForwardType type, size_t flags)
    : Backend(type) {
#ifdef LOG_VERBOSE
    MNN_PRINT("cpu backend create\n");
#endif
    mMemory = memory;
    mRuntime = const_cast<CPURuntime*>(runtime);
    std::shared_ptr<BufferAllocator::Allocator> defaultAlloc(
        BufferAllocator::Allocator::createRecurse(runtime->mStaticAllocator.get()));
    if (mRuntime->getAllocatorType() == Runtime::Allocator_Defer) {
        mDynamicAllocator.reset(new DeferBufferAllocator(defaultAlloc));
    } else {
        mDynamicAllocator.reset(new EagerBufferAllocator(defaultAlloc));
    }
    mStaticAllocator = runtime->mStaticAllocator;
    mPrecisionMode = precision;
    mCoreFunctions = MNNGetCoreFunctions();
    mInt8CoreFunctions = MNNGetInt8CoreFunctions();
    mCache = new CPUResizeCache;
}
```

1.1.2.2 Pipeline class: TuningAttr, UnitInfo
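The encode comment in the class below says a static model runs only step 3, while a dynamic model runs steps 1 to 3. A tiny sketch of that rule; the enum names are hypothetical labels for the three steps, and the reason given in the comment (a static model already carries its shapes and geometry) is an inference, not something the source states.

```cpp
#include <cassert>
#include <vector>

// Hypothetical labels for the three steps listed in the Pipeline::encode comment.
enum class EncodeStep { ComputeShape, GeometryTransform, CopyOpInfo };

std::vector<EncodeStep> encodeSteps(bool staticModel) {
    if (staticModel) {
        // Presumably shapes and geometry are already baked into a static model.
        return {EncodeStep::CopyOpInfo};
    }
    return {EncodeStep::ComputeShape, EncodeStep::GeometryTransform, EncodeStep::CopyOpInfo};
}
```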

```cpp
// source/core/Pipeline.hpp
/** pipeline. one session may contain multiple pipelines, and one pipeline may contain more than one unit. */
class Pipeline : public NonCopyable {
public:
    struct TuningAttr {
        bool autoSetOpType;
        int maxTuningNumber;
    };
    Pipeline(Schedule::PipelineInfo&& info, bool allocInput, bool outputStatic, const TuningAttr& tune,
             const Runtime* rt, const Runtime* cpuRt);
    ~Pipeline();
    class UnitInfo : public OperatorInfo {
    public:
        UnitInfo() = default;
        virtual ~UnitInfo() = default;
        void setUp(const Command& cmd, int index, const Op* originOp, int totalIndex);
    };

public:
    /** encode :
       1. compute shape for every op's inputs and outputs;
       2. geometry transform;
       3. copy op, inputs and outputs tensor info to mBuffer
       static_model: 3; dynamic_model: 1,2,3
    */
    ErrorCode encode(bool supportDebug = false, bool permitCodegen = false);
    /** allocMemory: create Execution and alloc memory for every op */
    ErrorCode allocMemory(bool firstMalloc, bool permitCodegen);
    /** execute this pipeline */
    ErrorCode execute();
    ErrorCode executeCallBack(const TensorCallBackWithInfo& before, const TensorCallBackWithInfo& after);
    Schedule::PipelineInfo& getPipelineInfo() {
        return mInfo;
    }

    float flops() const {
        return mFlops;
    }
    friend class Session;
    MNNForwardType getMainForwardType() const {
        return mInfo.first.cache.first->type();
    }

private:
    void _copyInputs();
    void _pushTuningTask(std::vector&& initInfos);
    void _recycleDynamicMemory(Command* command);
    Schedule::PipelineInfo mInfo;
    bool mAllocInput;
    bool mOutputStatic;
    TuningAttr mTuneAttr;
    float mFlops = 0.0f;
    bool mIsQuantModel = false;

    // For gpu or other backend
    std::map mCacheConstTensors;
    std::map mShapeFixConstCache;
#ifndef MNN_BUILD_MINI
    GeometryComputer::Context mContext;
    Runtime::CompilerType mUseGeometry;
#endif
    const Runtime* mRuntime;
    const Runtime* mCpuRuntime;
};
```

1.1.2.3 Pipeline::Pipeline
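The constructor below marks the pipeline as quantized as soon as any op has an input or output tensor carrying a quantAttr. The same check written with std::any_of, using hypothetical Tensor, QuantAttr, and OpCacheInfo stand-ins (in MNN the attribute is reached through TensorUtils::getDescribe(t) rather than directly on the tensor):

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <vector>

struct QuantAttr {};  // hypothetical: presence alone marks a quantized tensor
struct Tensor {
    std::shared_ptr<QuantAttr> quantAttr;  // null for float tensors
};
struct OpCacheInfo {
    std::vector<Tensor*> inputs, outputs;
};

bool hasQuant(const std::vector<Tensor*>& ts) {
    return std::any_of(ts.begin(), ts.end(),
                       [](const Tensor* t) { return t->quantAttr != nullptr; });
}

// True as soon as any op has a quantized output or input.
bool isQuantModel(const std::vector<OpCacheInfo>& ops) {
    return std::any_of(ops.begin(), ops.end(), [](const OpCacheInfo& op) {
        return hasQuant(op.outputs) || hasQuant(op.inputs);
    });
}
```

std::any_of stops at the first match, which is what the nested break statements in the original loop achieve.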

The per-op entries of a PipelineInfo are OpCacheInfo structs:

```cpp
// source/core/Pipeline.cpp
// typedef std::pair<BackendCache, std::vector<OpCacheInfo>> PipelineInfo;
//
// /** pipeline info */
// struct OpCacheInfo {
//     /** op */
//     const Op* op;
//     /** input tensors */
//     std::vector<Tensor*> inputs;
//     /** output tensors */
//     std::vector<Tensor*> outputs;
//     /** schedule type*/
//     Schedule::Type type = Schedule::Type::SEPARATE;
//
//     /**Command buffer for cache*/
//     CommandBuffer cacheBuffer;
//
//     /**Command buffer for execute*/
//     CommandBuffer executeBuffer;
//
//     std::map<const Op*, std::shared_ptr<Execution>> executionCache;
// };
//
Pipeline::Pipeline(Schedule::PipelineInfo&& info, bool allocInput, bool outputStatic, const TuningAttr& tune,
                   const Runtime* rt, const Runtime* cpuRt)
#ifndef MNN_BUILD_MINI
    // mContext is a GeometryComputer::Context
    : mContext(info.first.cache.second, info.first.cache.first->type(),
               info.first.info.user ? info.first.info.user->precision : BackendConfig::Precision_Normal),
      mUseGeometry(rt->onGetCompilerType()) {
#else
{
#endif
    rt->onCheckInfo(info.first.info);
    mRuntime = rt;
    mCpuRuntime = cpuRt;
    mTuneAttr = tune;
    mAllocInput = allocInput;
    mOutputStatic = outputStatic;
    mInfo = std::move(info);
    mIsQuantModel = false;
    // mInfo.second is a std::vector<OpCacheInfo>
    for (auto& iter : mInfo.second) {
        for (auto t : iter.outputs) {
            if (TensorUtils::getDescribe(t)->quantAttr.get() != nullptr) {
                // A quantized tensor means this is a quantized model
                mIsQuantModel = true;
                break;
            }
        }
        for (auto t : iter.inputs) {
            if (TensorUtils::getDescribe(t)->quantAttr.get() != nullptr) {
                mIsQuantModel = true;
                break;
            }
        }
        if (mIsQuantModel) {
            break;
        }
    }
}
```

1.1.2.3.1 GeometryComputer::Context

```cpp
class GeometryComputer {
public:
    virtual ~GeometryComputer() {
        // Do nothing
    }
    class MNN_PUBLIC Context {
    public:
        Context(std::shared_ptr<Backend> allocBackend, MNNForwardType type = MNN_FORWARD_CPU,
                BackendConfig::PrecisionMode precision = BackendConfig::Precision_Normal);
        ~Context();

        void clear();
        void setBackend(Backend* backend);
        void getRasterCacheCreateRecursive(Tensor* src, CommandBuffer& cmd);

        // If has cache, return. Otherwise create cache
        const std::vector<std::shared_ptr<Tensor>>& searchConst(const Op* op);
        std::shared_ptr<Tensor> allocConst(const Op* key, const std::vector<int>& shape, halide_type_t type,
                                           Tensor::DimensionType dimType = Tensor::TENSORFLOW);
        bool allocTensor(Tensor* tensor);
        inline MNNForwardType forwardType() const {
            return mForwardType;
        }
        inline BackendConfig::PrecisionMode precisionType() const {
            return mPrecision;
        }
        void pushCache(const CommandBuffer& buffer);
        std::shared_ptr<BufferStorage> mRasterOp;

    private:
        void getRasterCacheCreate(Tensor* src, CommandBuffer& cmd);
        std::map<const Op*, std::vector<std::shared_ptr<Tensor>>> mConstTensors;
        std::vector<std::shared_ptr<Tensor>> mEmpty;
        std::vector<std::shared_ptr<Tensor>> mTempConstTensors;
        std::shared_ptr<Backend> mBackend;
        MNNForwardType mForwardType;
        BackendConfig::PrecisionMode mPrecision;
        std::vector mRasterCmdCache;
    };
    static void init();
    MNN_PUBLIC static const GeometryComputer* search(int opType, Runtime::CompilerType compType);
    static void registerGeometryComputer(std::shared_ptr<GeometryComputer> comp, std::vector<int> type,
                                         Runtime::CompilerType compType = Runtime::Compiler_Geometry);
    virtual bool onCompute(const Op* op, const std::vector<Tensor*>& inputs, const std::vector<Tensor*>& outputs,
                           Context& context, CommandBuffer& cmd) const = 0;
    virtual bool onRecompute(const Op* op, const std::vector<Tensor*>& inputs, const std::vector<Tensor*>& outputs,
                             Context& context, CommandBuffer& cmd) const {
        return false;
    }
};
```
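GeometryComputer implementations are registered for a list of op types under one compiler type through registerGeometryComputer, and looked up per (op type, compiler type) with search. A minimal registry with that shape; Computer, Registry, and CompilerType are hypothetical, and whether MNN's search falls back to another compiler type when no entry exists is not modelled here.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <utility>
#include <vector>

enum class CompilerType { Geometry, Loop };  // hypothetical subset of Runtime::CompilerType

struct Computer {  // stand-in for GeometryComputer
    virtual ~Computer() = default;
    virtual const char* name() const = 0;
};

class Registry {
public:
    // Register one computer for several op types under one compiler type.
    void add(std::shared_ptr<Computer> comp, const std::vector<int>& opTypes,
             CompilerType compType = CompilerType::Geometry) {
        for (int t : opTypes) {
            mTable[{compType, t}] = comp;
        }
    }
    // Return the computer for (opType, compType), or nullptr if none is registered.
    const Computer* search(int opType, CompilerType compType) const {
        auto it = mTable.find({compType, opType});
        return it == mTable.end() ? nullptr : it->second.get();
    }

private:
    std::map<std::pair<CompilerType, int>, std::shared_ptr<Computer>> mTable;
};
```

Registering one shared instance under several op types mirrors the std::vector<int> type parameter: ops that share a geometry transform can share one computer object.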

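Copyright notice: unless otherwise stated, articles on this site are original works of 主机测评. When reposting or copying, link back with a hyperlink and credit the source.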
